Written by Jens Berger | February 23, 2017

Having an accurate video quality measure suited to the targeted service is a critical piece of the puzzle. Other pieces include capturing the video, analyzing it in real time, and dealing with errors. The video itself is a prerequisite for any quality analysis; bitstream-based or hybrid measures additionally require information from the IP layer.

Implementing a video quality measure in a real field measurement probe

Capturing images on a smartphone is very challenging. The algorithm expects uncompressed pictures as bitmaps, and each frame must be captured individually and in real time. To measure the true end-user experience, the images should be exactly as the user sees them. This means that buffer management and decoding must be left unchanged (i.e., as delivered by the video application).

The video should be captured only at the very end of the transmission chain, when the images are displayed to the user. The capturing process must be as smooth and lightweight as possible to avoid putting a heavy load on the phone. Otherwise, performance could suffer and distort other measurement results obtained at the same time.

In practice, the capturing process and the video quality measure are deeply intertwined. Usually, a captured frame is stored in memory and processed immediately. A simple file-based interface, where the video is stored as a file and evaluated later, is not feasible because of the enormous amount of uncompressed video data. Even a short 10-second full-HD clip would require far more than 1 GB, all of which would have to be written within those 10 seconds.
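The data volume behind that claim can be checked with a quick calculation. The sketch below assumes 24-bit RGB bitmaps (3 bytes per pixel) and 30 frames per second; neither value is stated in the article, and the function name is purely illustrative.

```python
# Rough estimate of the raw (uncompressed) data volume of a captured clip.
# Assumptions (not from the article): 24-bit RGB bitmaps, 30 frames per second.

def raw_video_bytes(width: int, height: int, bytes_per_pixel: int,
                    fps: float, seconds: float) -> int:
    """Return the number of bytes needed to store every frame uncompressed."""
    frame_bytes = width * height * bytes_per_pixel
    frame_count = int(fps * seconds)
    return frame_bytes * frame_count


if __name__ == "__main__":
    total = raw_video_bytes(1920, 1080, 3, fps=30, seconds=10)
    rate = total / 10  # bytes that would have to be written every second
    print(f"total: {total / 1e9:.2f} GB, write rate: {rate / 1e6:.0f} MB/s")
```

Under these assumptions, a 10-second full-HD clip amounts to about 1.87 GB, or roughly 187 MB that would have to be written every second. A 32-bit pixel format would push the total to about 2.49 GB.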

The framework to measure quality of experience

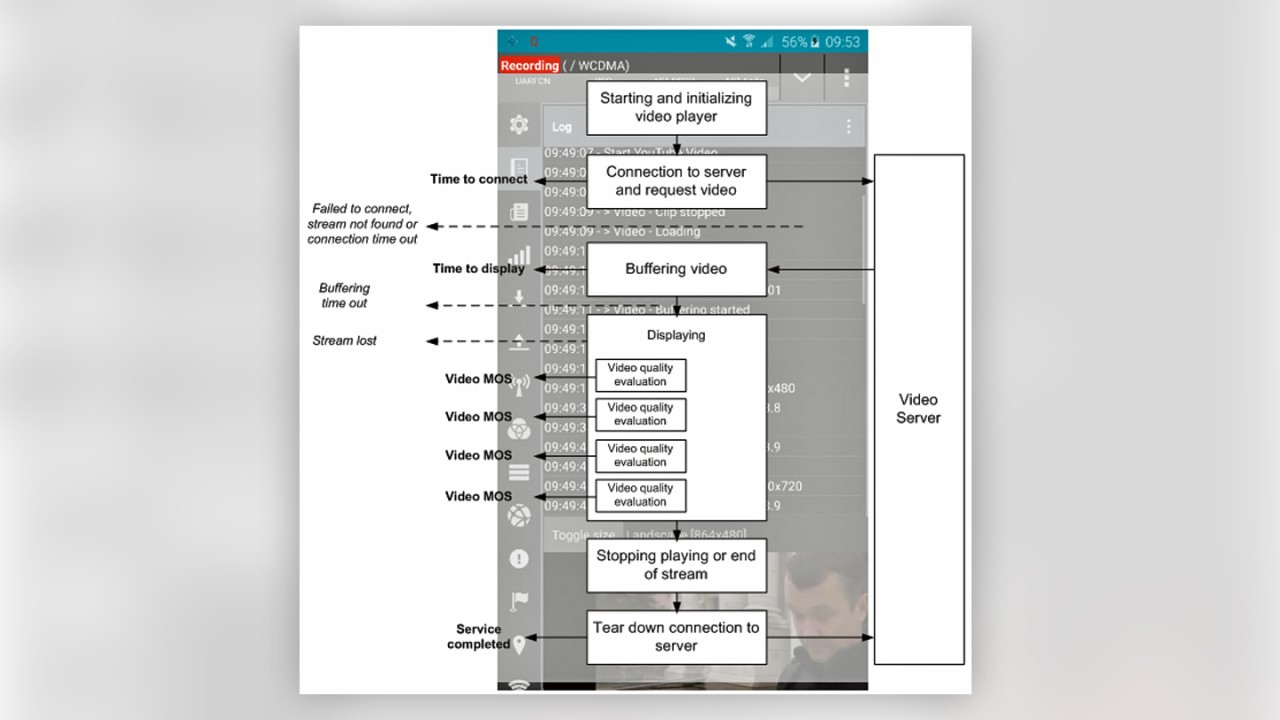

The capturing/analyzing software component is the heart and the brain of the video measurement tool. But what good are a heart and a brain without a body? A large framework is required to launch and control the video application on the device, apply timings, write and post-process the results, and manage all error cases and unforeseen behavior.

The test application not only has to start and stop the video application; it also has to handle cases where the video is unavailable or pop-ups appear on the viewer’s screen. Moreover, the test logs all events on the lower layers, including the radio and IP levels.

Assuming the test application is up and running, we still have to address more general questions. Is it enough to evaluate only one video service, or should the results of various individual services be compared? If the latter, how can different video services best be compared?

What results should a video quality measure, i.e., a “video test”, deliver?

First and foremost, a video test should provide results that quantify the quality a user will experience. Additional results that give more insight, or logging functions for lower layers, might be of interest, but the main outcome relates to the QoE (quality of experience). Ultimately, a service’s usefulness and acceptance depend only on how the user perceives it; hidden and invisible process markers are secondary. This end-user-centric approach can be broken down into a few primary metrics, including:

1. Is the requested video accessible?

2. How long is the wait before the video starts to play?

3. What’s the quality like? Are the images sharp and crisp? Are there any pixel or image errors?

4. Does the video freeze during playback?

5. Is the video “lost”, i.e., does it stop playing?

Not all of these metrics rely on image analysis; however, they are just as important. In technical terms, they can be analyzed as follows:

1. Success statistics (percentage of Completed View, Failed Access, Lost Video)

2. Time to first picture (waiting time)

3. Visual quality as MOS score (picture quality)

4. Ratio of freezing during display time (video fluency)
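To make the time-based metrics more concrete, here is a minimal sketch that derives them from a hypothetical per-test record: the request time, the display timestamps of new pictures, and an outcome label. The freeze rule (a picture shown longer than a fixed threshold counts as frozen for the whole interval), the 0.2 s threshold, and all names are assumptions for illustration, not definitions taken from ETSI TR 101 578. The visual MOS comes from the quality model itself and is not computed here.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical freeze criterion: a picture that stays on screen longer than
# this is counted as frozen for the whole interval.
FREEZE_THRESHOLD_S = 0.2

OUTCOMES = ("completed", "failed_access", "lost_video")


@dataclass
class VideoTest:
    request_time: float        # when the video was requested (s)
    frame_times: List[float]   # display timestamps of new pictures (s)
    outcome: str               # one of OUTCOMES


def time_to_first_picture(test: VideoTest) -> Optional[float]:
    """Waiting time from the request to the first displayed picture."""
    if not test.frame_times:
        return None  # no picture at all: a failed access, not a waiting time
    return test.frame_times[0] - test.request_time


def freeze_ratio(test: VideoTest, threshold: float = FREEZE_THRESHOLD_S) -> float:
    """Share of the display time during which the picture was frozen."""
    times = test.frame_times
    if len(times) < 2:
        return 0.0
    display_time = times[-1] - times[0]
    if display_time <= 0:
        return 0.0
    frozen = sum(b - a for a, b in zip(times, times[1:]) if b - a > threshold)
    return frozen / display_time


def success_statistics(tests: List[VideoTest]) -> Dict[str, float]:
    """Percentage of tests per outcome (Completed View, Failed Access, Lost Video)."""
    counts = Counter(t.outcome for t in tests)
    return {o: 100.0 * counts[o] / len(tests) for o in OUTCOMES}


if __name__ == "__main__":
    test = VideoTest(0.0, [1.0, 1.125, 1.25, 2.25, 2.375, 2.5], "completed")
    print(time_to_first_picture(test))   # 1.0
    print(round(freeze_ratio(test), 3))  # 0.667
```

In the toy record, one 1-second gap within 1.5 seconds of display time yields a freeze ratio of two thirds. A real tool would need a more careful freeze definition, for example one that also detects repeated identical pictures.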

Looking at these metrics, it becomes clear that they apply to all video services. Regardless of a service’s particular implementation details, these measures cover all important influences and can be applied to all mobile video services. Consequently, the European Telecommunications Standards Institute (ETSI) adopted this QoE-driven approach in its latest Annex A to TR 101 578.

The measurement flow to obtain these metrics reflects the user’s approach: start the video service, request a video, and start playing it (or not). Once the video is finished or the user stops it, the test is over.
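This flow maps naturally onto a small classifier that turns a test’s event timeline into one of the three success outcomes. The sketch below is hypothetical: the event names, the 30-second access timeout, and the rule that stopping before the first picture counts as a failed access are illustrative assumptions, not part of any standard.

```python
from typing import List, Tuple

# Hypothetical: no first picture within this time after the request -> failed access.
ACCESS_TIMEOUT_S = 30.0


def classify_test(events: List[Tuple[float, str]],
                  timeout: float = ACCESS_TIMEOUT_S) -> str:
    """Classify one video test from time-ordered (timestamp, event) pairs.

    Hypothetical event names: "request", "first_picture", "video_end",
    "user_stop", "playback_stopped".
    """
    request_t = None
    started = False
    for t, name in events:
        if name == "request":
            request_t = t
        elif name == "first_picture":
            if request_t is None or t - request_t > timeout:
                return "failed_access"  # picture never requested or came too late
            started = True
        elif name in ("video_end", "user_stop"):
            # Regular end of the test: the video finished or the user stopped it.
            return "completed" if started else "failed_access"
        elif name == "playback_stopped":
            # Playback broke off before the regular end.
            return "lost_video" if started else "failed_access"
    # The log ended without a regular end of playback.
    return "lost_video" if started else "failed_access"


if __name__ == "__main__":
    print(classify_test([(0, "request"), (2, "first_picture"), (60, "video_end")]))
```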

The measurement approach is independent of the service type. It can be applied to both video-on-demand and live video. The approach applies to any download strategy, regardless of whether it is progressive, applies re-buffering, or is based on a continuous real-time video stream.

Is there a single integrative score for video QoE?

Fair question. There is no normative formula for integrating the individual metrics into a single score. It is not easy to define an overall integration formula because a video service’s QoE is driven not only by hard metrics but also by soft factors. For example, user expectations for a free video service differ from expectations for paid services. They also depend on the content and differ between news, weather forecasts, movies, and music clips. Moreover, the viewing environment matters: user expectations differ depending on whether an individual watches the mobile video at close range or in a group and from a distance.

Equipment vendors and network operators are busy weighting and combining individual scores into one rating. A common pattern is emerging: freezing is weighted most heavily, followed by visual quality and waiting time. The success ratio, i.e., the share of requested videos that are accessible, is high in today’s networks. In the overall network view, only attempts that fail during a drive test are taken into account.
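A weighted combination of this kind might look like the sketch below. The weights, the normalization caps, and the mapping onto a 1 to 5 scale are all hypothetical; only the ordering (freezing before visual quality before waiting time) follows the tendency described above, and no vendor’s or operator’s actual formula is implied.

```python
# Hypothetical weights, ordered as described: freezing > visual quality > waiting time.
WEIGHTS = {"freezing": 0.5, "visual": 0.3, "waiting": 0.2}
MAX_FREEZE_RATIO = 0.2   # hypothetical: at or above this ratio, fluency scores zero
MAX_WAIT_S = 10.0        # hypothetical: at or above this waiting time, it scores zero


def clamp01(x: float) -> float:
    """Limit a value to the range [0, 1]."""
    return max(0.0, min(1.0, x))


def combined_score(visual_mos: float, freeze_ratio: float, wait_s: float) -> float:
    """Map three individual metrics to one illustrative rating on a 1-5 scale."""
    parts = {
        "visual": clamp01((visual_mos - 1.0) / 4.0),          # MOS 1..5 -> 0..1
        "freezing": clamp01(1.0 - freeze_ratio / MAX_FREEZE_RATIO),
        "waiting": clamp01(1.0 - wait_s / MAX_WAIT_S),
    }
    weighted = sum(WEIGHTS[k] * parts[k] for k in WEIGHTS)  # 0..1, weights sum to 1
    return 1.0 + 4.0 * weighted


if __name__ == "__main__":
    print(f"{combined_score(4.0, 0.05, 2.0):.2f}")  # 4.04
```

Because the weights sum to 1, perfect inputs map to 5 and the worst inputs to 1. Adjusting the weights is exactly where the soft factors mentioned above, such as content type or paid versus free service, would have to come in.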

How can a video quality measure be used for network optimization?

Automated testing of video services in real field mobile networks has just begun. The first measurement tools are now available, and soon sufficient experience will be available to substantiate the impact of QoE on mobile video services.

The results of mobile video testing provide insights for various stakeholders. Network operators want to optimize their networks for video services, content and service providers want to compare their services against competitors, and authorities need answers on network neutrality. All of these answers require testing with comparable KPIs and trigger points.

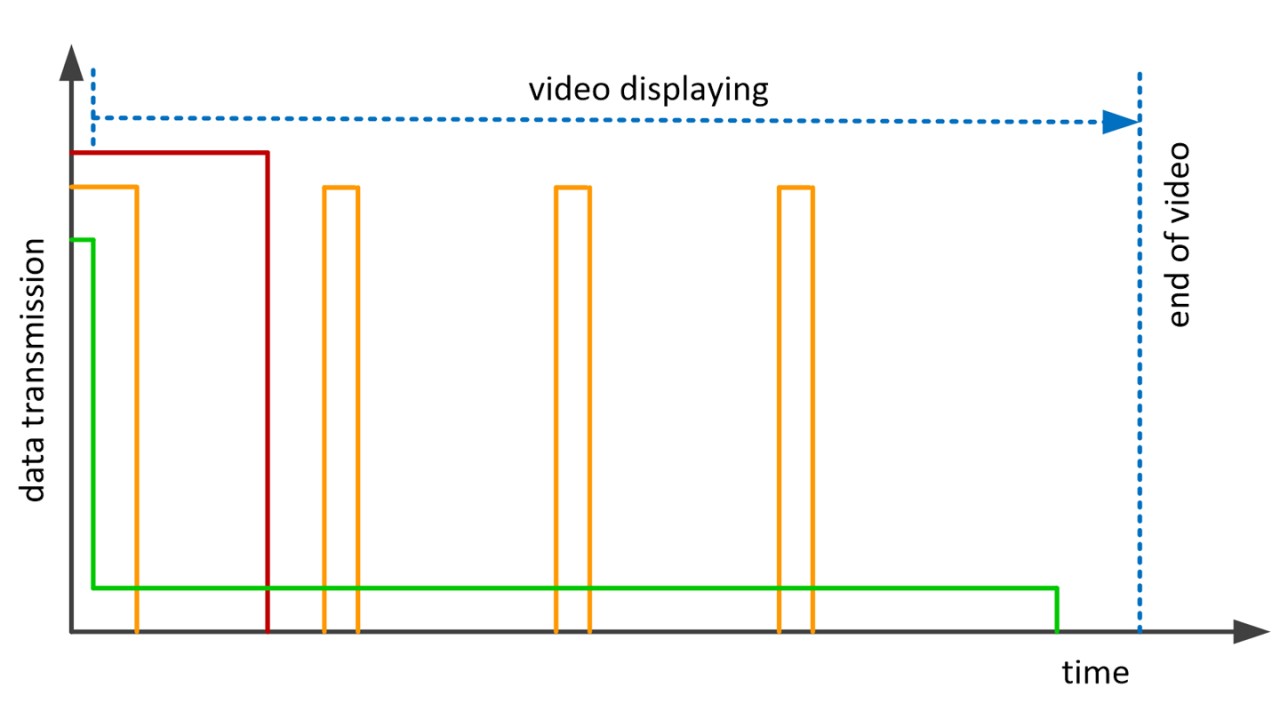

One crucial question remains: how can a network be improved and optimally prepared for video services? Of course, a high-speed data network will generally behave well for video services. But in the end, each video service’s streaming and buffering strategies define how air interface resources are used. In this regard, traditional pre- and progressive download approaches offer network operators the biggest advantages.

These downloads are treated as file downloads. The faster a video is downloaded, the sooner it will play. Once started, the video is fed from the local buffer, and a network connection is no longer needed. Testing with these types of video services is advantageous in networks with high static speed. However, the test will not deliver more information than a plain HTTP download. Moreover, an unfinished video wastes a lot of resources, which is never in an operator’s interest.
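A toy model illustrates both effects: throughput alone determines how quickly playback can start and the file completes, and an abandoned video leaves the rest of the file unused. The sketch assumes, hypothetically, that the player fetches the whole file at full link speed without throttling and starts after a fixed initial buffer; the parameter names and example values are illustrative only.

```python
def progressive_download(video_s: float, bitrate_mbps: float,
                         throughput_mbps: float, startup_buffer_s: float,
                         watched_fraction: float) -> dict:
    """Toy model of a progressive download that fetches the whole file at full speed."""
    size_mbit = video_s * bitrate_mbps
    return {
        "size_mb": size_mbit / 8,
        # Time to fill the initial buffer before playback starts.
        "startup_delay_s": startup_buffer_s * bitrate_mbps / throughput_mbps,
        # Time until the complete file sits in the local buffer.
        "full_download_s": size_mbit / throughput_mbps,
        # Data transferred but never watched if the viewer stops early.
        "wasted_mb": size_mbit * (1.0 - watched_fraction) / 8,
    }


if __name__ == "__main__":
    r = progressive_download(600, 2.0, 50.0, 5.0, 0.2)
    for key, value in r.items():
        print(f"{key}: {value:.1f}")
```

For a 10-minute video at 2 Mbit/s over a 50 Mbit/s link, the 150 MB file arrives after 24 seconds, so the result mostly reflects the raw throughput, as a plain HTTP download would. A viewer who stops after 20% of the video leaves about 120 MB transferred for nothing.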

Streaming and buffering strategies for video transmission

If the service delivers the video chunk-wise, as is usually the case nowadays, the situation is almost the same: the network must transport a considerable amount of data into the phone’s buffer in a very short time. Depending on the chunk frequency, there is some flexibility as to when each chunk must be delivered. A well-filled buffer in the phone can bridge a loss of network connectivity for quite some time. Nevertheless, the network has to provide transport capacity frequently to enable timely video transmission.

Near real-time streaming is the most challenging task for a network. With almost no video in the buffer, the network must refill it continuously. This requires not only a certain transport capacity but also capacity that is continuously available. While the first two delivery types are quite robust against network outages, near real-time streaming is very sensitive to throughput limitations and outages.
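The difference in robustness can be illustrated with a deliberately simple buffer simulation in one-second steps. The model is a hypothetical sketch: the buffer is counted in seconds of video, starts full, is refilled at a fixed multiple of the video bitrate while connected, and playback freezes whenever less than one second of video is buffered. The 30-second and 2-second buffer targets are assumed stand-ins for chunk-wise and near real-time delivery.

```python
from typing import Tuple


def simulate_stalls(buffer_target_s: float, throughput_ratio: float,
                    outage: Tuple[int, int], duration_s: int) -> int:
    """Return the number of seconds during which playback is frozen.

    buffer_target_s:  seconds of video the player tries to keep buffered
    throughput_ratio: network throughput divided by video bitrate while connected
    outage:           (start, end) seconds during which nothing is delivered
    """
    buffer_s = buffer_target_s  # assume the buffer is already full at t = 0
    stalled = 0
    for t in range(duration_s):
        connected = not (outage[0] <= t < outage[1])
        if connected:
            # Refill, but never beyond the player's buffer target.
            buffer_s = min(buffer_target_s, buffer_s + throughput_ratio)
        if buffer_s >= 1.0:
            buffer_s -= 1.0   # play one second of video
        else:
            stalled += 1      # nothing to show: the picture freezes
    return stalled


if __name__ == "__main__":
    for target in (30.0, 2.0):
        frozen = simulate_stalls(target, 1.5, outage=(10, 20), duration_s=60)
        print(f"buffer {target:.0f} s: {frozen} s frozen")
```

With a 10-second outage and a throughput of 1.5 times the bitrate, the 30-second buffer bridges the gap without any freezing, while the 2-second buffer freezes for 9 seconds. Real players adapt bitrate and buffer targets, so this only shows the direction of the effect.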

A real-time video quality measure is most effective

Given that the most popular video services deliver chunk-wise and that all video services are moving closer to near real-time transmission, the most effective way to evaluate a network’s readiness for video services is to use real-time live video services such as YouTube or Netflix.

With our unique real-time video quality analysis application, which runs on all Android smartphones and supports YouTube and Netflix, we offer the most in-depth measurement approach. Its video-MOS prediction is based on the ITU-T-approved no-reference measure J.343.1. It uncovers outages and limitations in data transport, discriminates more powerfully between networks, and separates the wheat from the chaff.

Remember: today’s live video is a look into the future. Live video and other real-time applications will become more and more popular. Networks optimized for live video today are optimally prepared for the real-time services of tomorrow.

Stay online, and measure the future today!

Find more information about mobile video quality testing on our dedicated web page.