Written by Johanna Sochos | February 22, 2018

In this blog series, we want to answer some questions: How can the quality of experience (QoE) of interactions with smartphone data apps be measured? How do such data transfers differ from plain HTTP transfers? What is going on in the background? And how should this knowledge be used for mobile network testing?

KPIs of mobile data application tests

Classical network tests are usually based on very technical parameters such as HTTP throughput, ping response time, and UDP packet loss rate. Trigger points for measuring the durations of certain processes are often extracted from the phone's RF trace information or from the IP stream, because the structure of the test is known and the protocol information is unencrypted.

When a test is created for a certain service offered by a smartphone app, these possibilities are very limited. There are many differences compared to classical data tests:

- The IP traffic between client device and service is usually encrypted, and the payload cannot be analyzed.

- The app’s data handling is hidden. Either the client or the server might perform data compression at some point; this takes time and might be part of the test duration even though nothing network-relevant is happening.

- The server hosting the service belongs to a third party and cannot be controlled by the network operator or the company conducting the measurement campaign. In addition, the service may behave differently from test to test, or over time, because of dynamic adaptation and changes in the app and server setups.

- Apps are consumer software with certain instabilities and are subject to frequent updates. Often, the user is forced to update to the newest version to keep the service working.

- The services are subject to change, and even with the same app version the network interaction may change suddenly due to server influences.

It is, therefore, not possible to create a technical mobile data application test to analyze those services under reproducible conditions. It is often not even possible to see intermediate trigger points in IP or RF. Only information on the user interface is available to evaluate those consumer services.

Throughputs, transfer and response times, and other technical measures cannot be obtained with confidence and are influenced by the server and client internal setups. They, therefore, do not reflect the network performance and provide almost no valuable information for network characterization.

What drives user perception?

When measuring and scoring the user’s perception and satisfaction with these services, technical parameters are not a valid indicator anyway. If technical parameters are not applicable to measure QoE, we should ask: What drives user perception and satisfaction?

The most reliable way to measure a subscriber’s perceived QoE is to observe the user interface directly. This is the only information the user has. Consequently, the user experience can only be determined from information that is visible to the user. All sorts of feedback are provided, but the main criteria are, of course, the success of the task and the time to finish it, e.g., to download something or to receive confirmation that a message has been delivered. In other words:

- Can the desired action or set of actions be successfully completed?

- Is the overall duration short or, better, short enough given the user’s expectations?

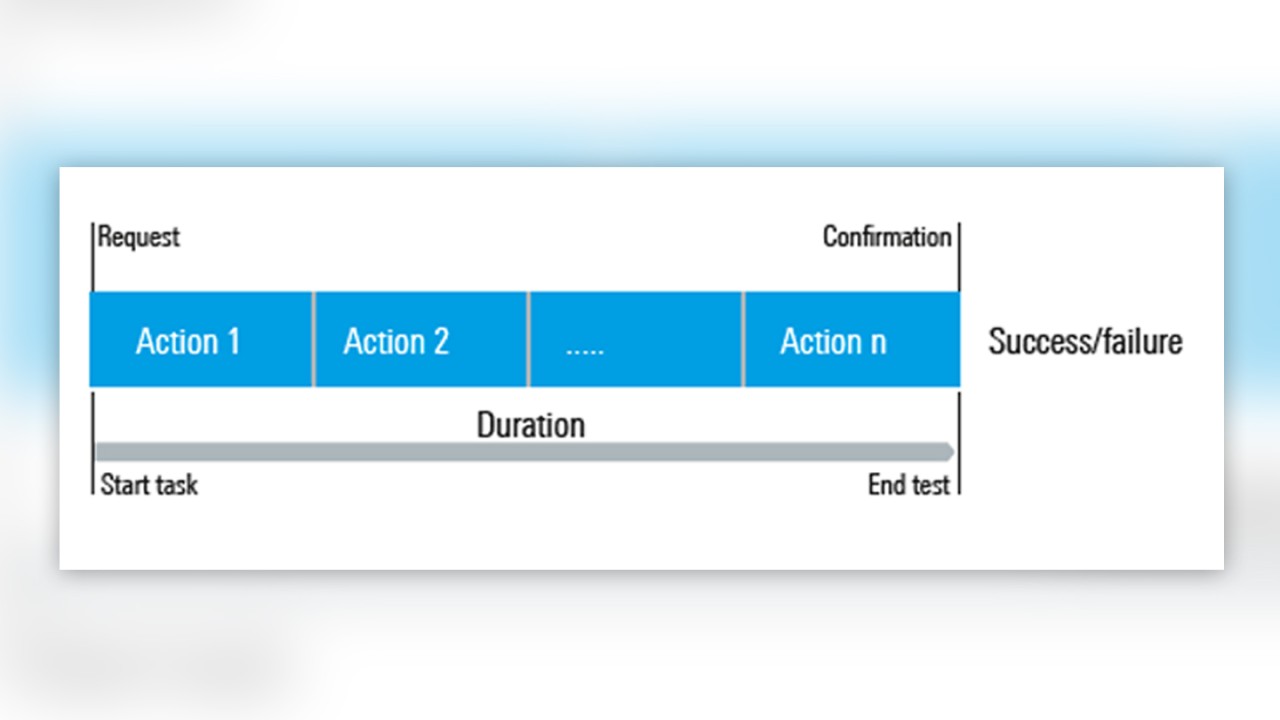

The figure above shows the schematic timeline of a mobile data application test. The user starts with a request (e.g., opens the home screen of Facebook) and finishes the interaction with the service after 1 to n individual actions (like posting a picture and commenting on another post). The user experience will be good if all actions can be successfully completed and the overall duration is short.
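The two criteria can be reduced to a small calculation over the individual actions of a session. The following is a minimal sketch only: the `Action` record, its field names, and the `session_kpis` helper are hypothetical and do not come from any measurement tool; timestamps are assumed to be taken from events observed on the user interface.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Action:
    """One user action observed on the UI (hypothetical record layout)."""
    name: str
    start_s: float   # time the user triggered the action, in seconds
    end_s: float     # time the UI showed the result, in seconds
    success: bool    # True if the UI confirmed completion


def session_kpis(actions: List[Action]) -> dict:
    """Return overall success and overall duration for 1..n actions."""
    if not actions:
        raise ValueError("a session needs at least one action")
    # The session succeeds only if every single action succeeded.
    all_ok = all(a.success for a in actions)
    # Overall duration spans from the first request to the last result.
    duration = max(a.end_s for a in actions) - min(a.start_s for a in actions)
    return {"success": all_ok, "duration_s": duration}


session = [
    Action("open_home_screen", 0.0, 1.5, True),
    Action("post_picture", 2.0, 5.0, True),
]
print(session_kpis(session))
```

A single failed action marks the whole session as failed, which mirrors the first criterion: the user wanted to complete the entire set of actions.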

It should be noted that the time to finish a task is still a technical parameter. It should not be linearly translated into a QoE score such as a mean opinion score.

The dependency between the technical term ‘time’ and the perception of waiting for the expected fulfillment is not linear; there is saturation at both ends of the scale. A shorter time will not improve the perceived QoE if the duration is already very short, and a bad QoE may not get any worse if the duration increases further.
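A saturating relationship of this kind can be sketched as a clamped curve. Everything numeric below is an assumption made purely for illustration: the thresholds `t_good_s` and `t_bad_s`, the 1-to-5 scale, and the logarithmic shape in between are not taken from the measurements or from any standard.

```python
import math


def illustrative_score(duration_s: float,
                       t_good_s: float = 1.0,
                       t_bad_s: float = 10.0) -> float:
    """Map a task duration to a score between 5 (best) and 1 (worst).

    Below t_good_s the score saturates at 5; above t_bad_s it saturates
    at 1. The thresholds and the log-shaped transition are hypothetical.
    """
    if duration_s <= t_good_s:
        return 5.0          # already fast enough: faster does not help
    if duration_s >= t_bad_s:
        return 1.0          # already bad: slower does not hurt further
    # Position between the two thresholds on a logarithmic time axis (0..1).
    position = math.log(duration_s / t_good_s) / math.log(t_bad_s / t_good_s)
    return 5.0 - 4.0 * position


for t in (0.2, 1.0, 3.0, 10.0, 60.0):
    print(f"{t:5.1f} s -> {illustrative_score(t):.2f}")
```

Both 0.2 s and 1.0 s give the top score, and both 10 s and 60 s give the bottom score, which is the saturation at either end described above.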

The translation of task duration (time) into QoE is an ongoing task. It depends on the type of service and the (increasing) expectation of the subscribers and the feedback culture of the service, e.g., intermediate feedback or other diversions, maybe even smartly placed advertisements.

Transfer duration goes into saturation

Germany-wide drive and walk tests provide large-scale, real-field insights. During the test campaign with the Rohde & Schwarz mobile network testing benchmarking solutions Benchmarker II (in combination with the Vehicle Roof Box hosting the probes) and Freerider III, 12,000 km of test routes were covered and 400,000 data measurements were collected.

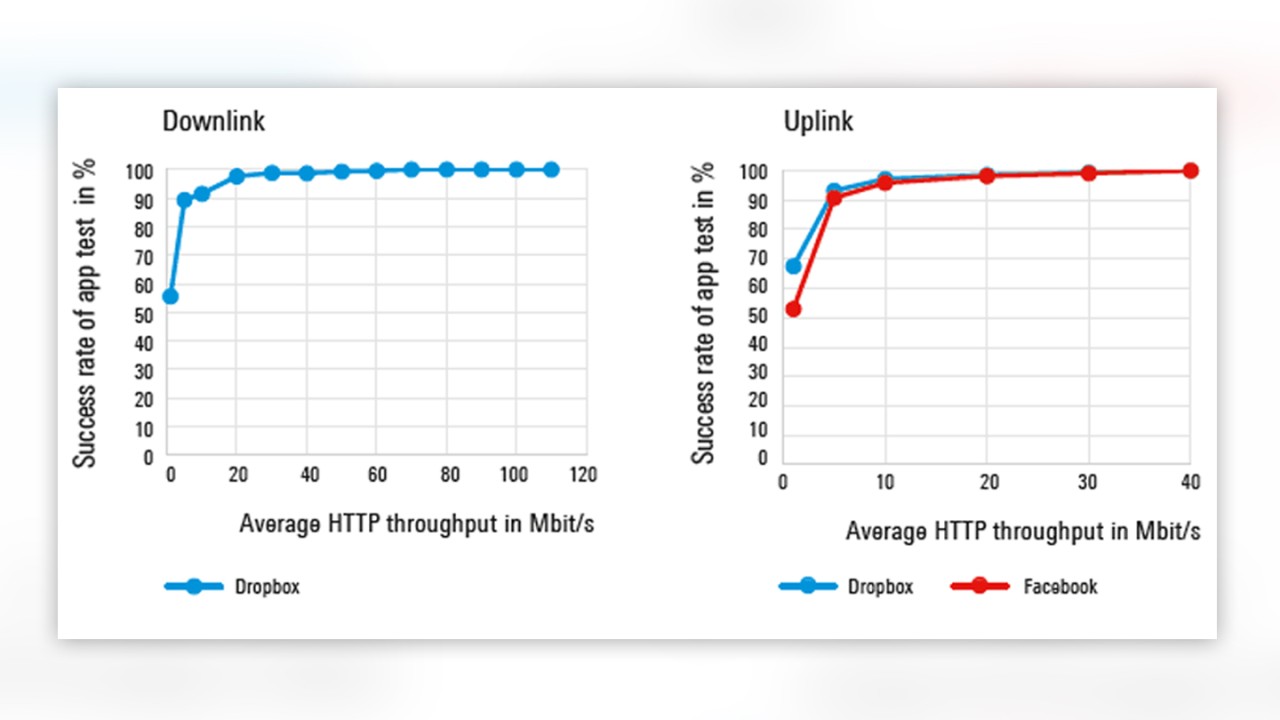

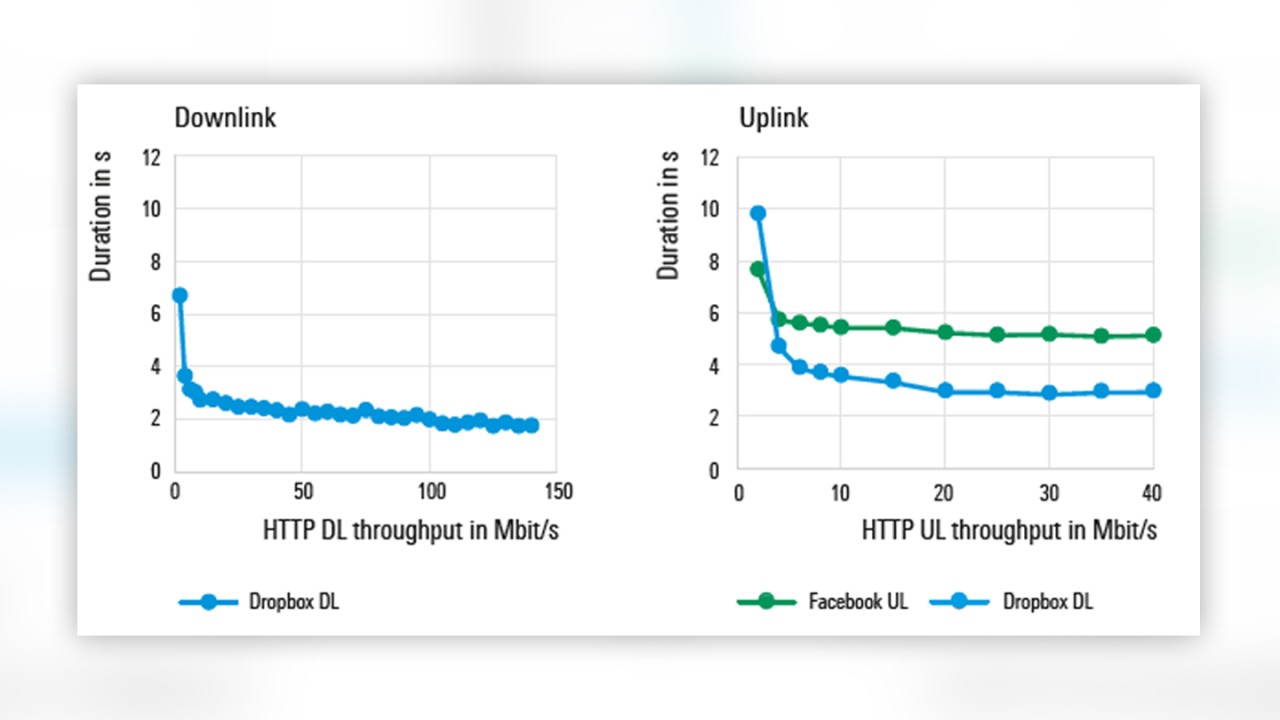

In this campaign, the durations for transferring 1 MB files to Dropbox and Facebook were measured. Comparing them with the throughputs measured in classical HTTP transfer tests gives the following picture:

- The success rates reach values close to 100% at available plain transfer speeds of around 20 Mbit/s.

- The measured duration almost saturates beyond a throughput of 40 Mbit/s for downlink and 10 Mbit/s for uplink tests.

This means that the QoE for Facebook and Dropbox tests based on transfers of typical 1 MB images in our test setup no longer improves at higher available transfer speeds. This may be useful information when optimizing a network.
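A back-of-the-envelope calculation gives a feel for the orders of magnitude involved. The sketch below is idealized arithmetic, not campaign data: it assumes 1 MB equals 10^6 bytes and ignores latency, protocol overhead, and any processing in the app or on the server. The function name is hypothetical.

```python
def ideal_transfer_time_s(size_bytes: int, throughput_mbit_s: float) -> float:
    """Time to move size_bytes at a constant throughput, with no overhead."""
    bits = size_bytes * 8
    return bits / (throughput_mbit_s * 1_000_000)


ONE_MB = 1_000_000  # assumption: 1 MB taken as 10^6 bytes

for speed in (10, 20, 40, 100):
    t = ideal_transfer_time_s(ONE_MB, speed)
    print(f"{speed:4d} Mbit/s -> {t:.2f} s")
```

Under these assumptions, the pure transfer takes 0.8 s at 10 Mbit/s and 0.2 s at 40 Mbit/s. Going from 40 to 100 Mbit/s can save at most about 0.12 s, so any fixed time spent elsewhere in the test quickly outweighs the transfer itself.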

Where does the saturation come from? What is going on in the background when using a service such as Facebook?

Learn more and read parts 2 and 3 of this series:

Measuring QoE of mobile data applications: Behind the scenes (part 2)

Measuring QoE of mobile data apps for network optimization (part 3)